Introduction

Oracle Digital Assistant (ODA) now incorporates generative AI features such as summarization, sentiment analysis, and conversational interactions within dialog flows using the new Invoke Large Language Model (LLM) component. This blog explores scenarios where ODA uses LLM blocks to deliver human-like responses to user queries, particularly when interacting with Fusion Applications data. Let’s first look at the FA Digital Assistant and the configuration steps needed to enable LLM services within ODA.

FA Digital Assistant

Oracle’s FADigitalAssistant, part of the Oracle Fusion Applications suite, is an AI-powered enterprise conversational assistant designed to improve user productivity and engagement across business functions. It lets users interact with Fusion Applications in natural language, both spoken and typed, making complex tasks simpler and faster to complete.

The FADigitalAssistant offers a wide range of prebuilt skills designed to improve efficiency in various business processes. These skills are available across multiple Oracle Fusion modules, such as Human Capital Management (HCM), Enterprise Resource Planning (ERP), and Supply Chain Management (SCM). For example:

- Employees can check their paychecks, PTO/vacation balances, and benefits.

- Employees can manage expenses, including submitting expense reports through conversational inputs such as photos of receipts.

Customers can expand the functionality of these skills by extending or cloning them to add features.

LLM Integration using OCI Generative AI

Oracle Digital Assistant (ODA) now supports large language models (LLMs), enabling you to enhance skills with advanced generative AI functionalities. While ODA offers pre-built templates for various LLMs (Cohere, OpenAI, Llama, etc.), you have the flexibility to integrate any LLM of your choice. In this blog, we will focus on integrating LLMs using the OCI Generative AI service in a few simple steps.

Step 1: Policy Details

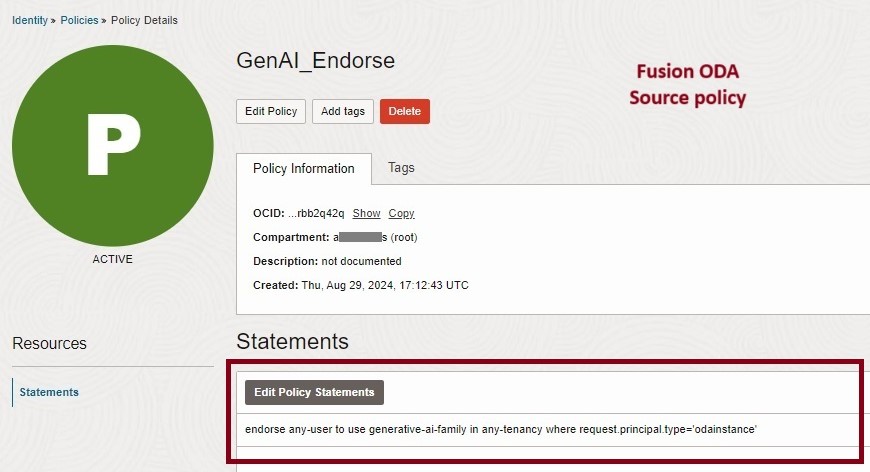

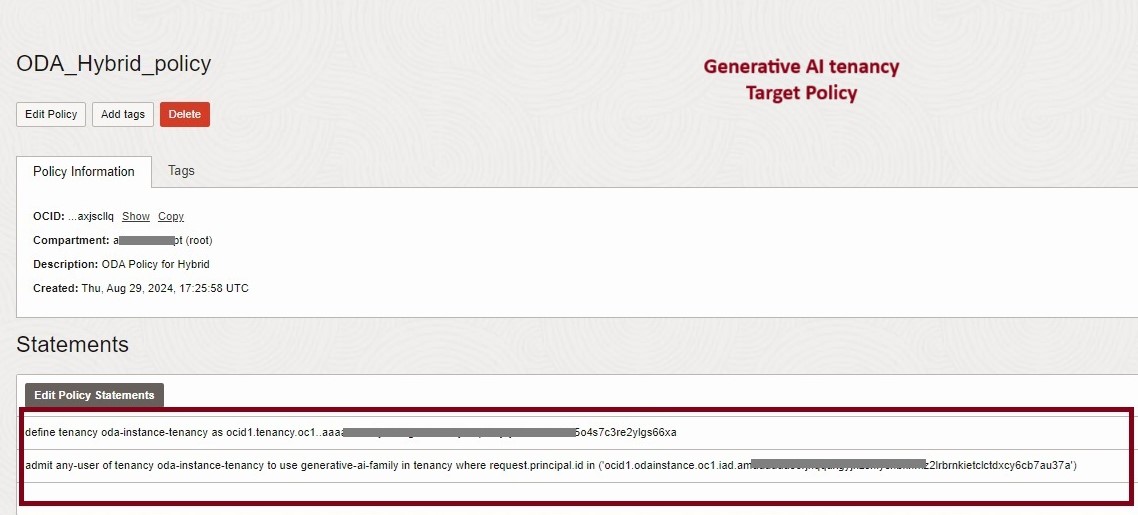

We need to define source and target tenancy policies so that the Fusion Digital Assistant can access and invoke the Generative AI service. The ODA documentation on LLM Services provides details on the policies needed for various topologies. In this blog, we look specifically at how to grant a Fusion SaaS-paired digital assistant (the source service) access to invoke the Generative AI service (the target service) hosted in a separate tenancy, e.g., a PaaS tenancy.

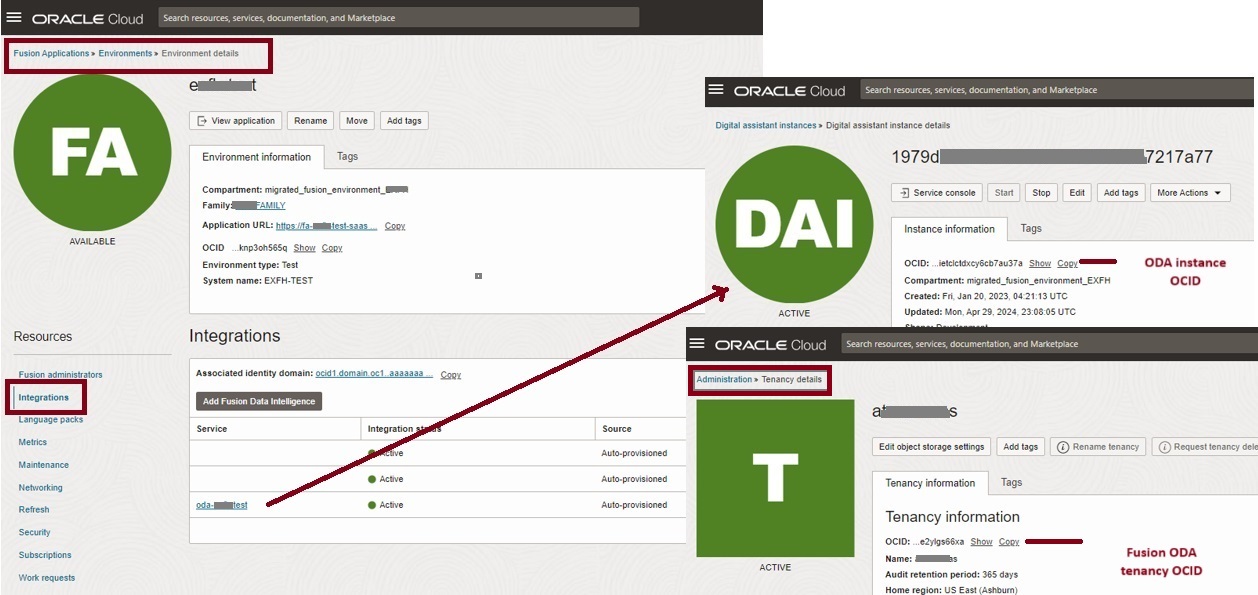

The following artifacts are needed:

- OCID of the Fusion ODA instance

- OCID of the Fusion tenancy

These OCID values can be obtained from the OCI Console of the Fusion SaaS tenancy, as shown below. If access to the Fusion SaaS OCI Console is not available, they can be obtained by raising a support service request (SR).

Once the OCIDs are obtained, the policies can be added to source and target tenancies as shown below.

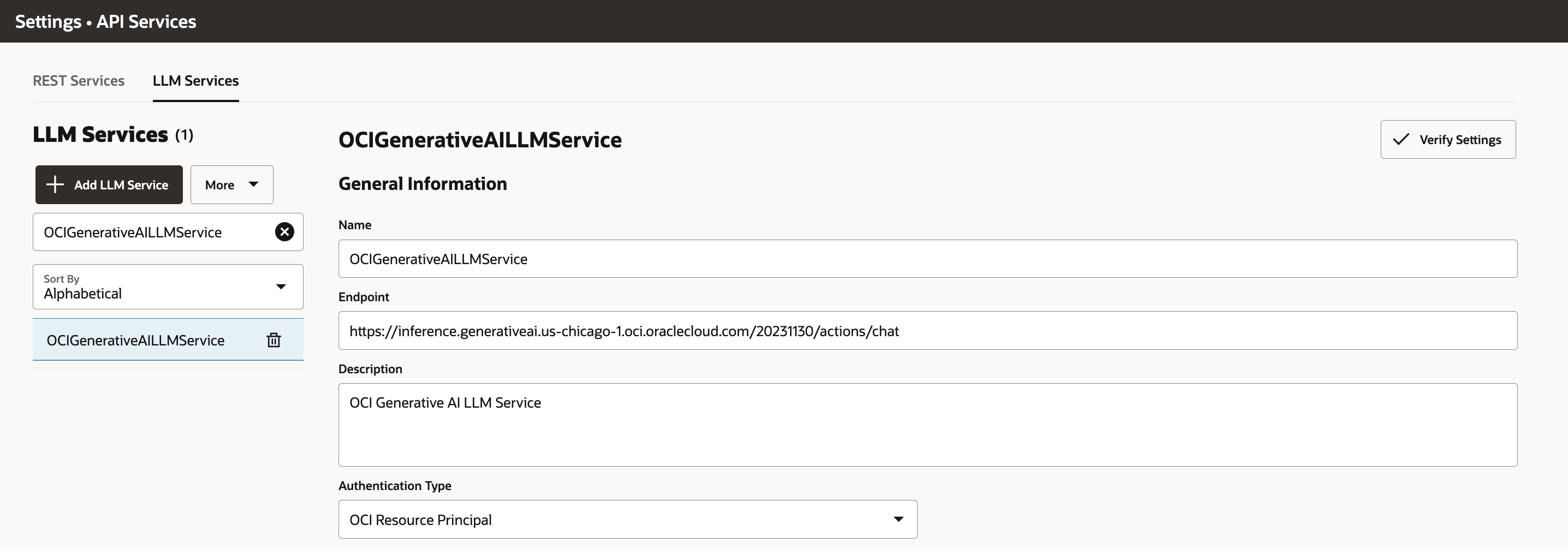

Step 2: LLM Services – REST API

After setting the necessary policies, the next step is to create a service that allows Oracle Digital Assistant to access the LLM provider’s endpoint. You do this by navigating to Select > Settings > API Services. At the time of writing this blog, the OCI Generative AI service is available in the Chicago, Frankfurt, London, and São Paulo regions. In this blog, we integrate the OCI Generative AI chat endpoint using “cohere.command-r-plus”, the latest model at the time of writing. It is designed specifically for conversational interactions and tasks involving long contexts, and it supports a context length of up to 128K tokens.
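
As a rough illustration of what the endpoint registered in the API service might look like, the sketch below builds a chat endpoint URL per region. The host pattern, the `20231130` API version segment, and the region identifiers are assumptions based on how the OCI Generative AI inference API is commonly documented, so verify them against the OCI documentation for your tenancy. `chatEndpointFor` is a hypothetical helper and not part of ODA.

```javascript
// Assumed region identifiers for the four regions named above.
const GENAI_REGIONS = new Map([
  ["Chicago", "us-chicago-1"],
  ["Frankfurt", "eu-frankfurt-1"],
  ["London", "uk-london-1"],
  ["São Paulo", "sa-saopaulo-1"],
]);

// Hypothetical helper: build the chat endpoint URL for a region name.
function chatEndpointFor(regionName) {
  const regionId = GENAI_REGIONS.get(regionName);
  if (!regionId) {
    throw new Error(`Unsupported region: ${regionName}`);
  }
  // Assumed URL pattern; confirm it in the OCI Generative AI documentation.
  return `https://inference.generativeai.${regionId}.oci.oraclecloud.com/20231130/actions/chat`;
}

console.log(chatEndpointFor("Chicago"));
```

Keeping the region lookup in one place makes it easier to switch the skill to another region later without touching the rest of the configuration.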

Sample request payload for testing the added LLM service (update the compartment ID to match your access):

    {
      "compartmentId": "ocid1.tenancy.oc1..",
      "servingMode": {
        "modelId": "cohere.command-r-plus",
        "servingType": "ON_DEMAND"
      },
      "chatRequest": {
        "message": "Tell me something about the Oracle Digital Assistant.",
        "maxTokens": 1000,
        "isStream": false,
        "apiFormat": "COHERE",
        "frequencyPenalty": 1,
        "presencePenalty": 0,
        "temperature": 0.75,
        "topP": 0.7,
        "topK": 1
      }
    }
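
If you test the service from a script rather than by pasting JSON, a small builder can keep the defaults in one place. This is a minimal sketch that mirrors the sample payload above; `buildChatRequest` and the example OCID are hypothetical and only for illustration.

```javascript
// Hypothetical helper: assemble a chat request body like the sample payload.
function buildChatRequest(compartmentId, message, overrides = {}) {
  if (!compartmentId || !message) {
    throw new Error("compartmentId and message are required");
  }
  return {
    compartmentId,
    servingMode: {
      modelId: "cohere.command-r-plus",
      servingType: "ON_DEMAND",
    },
    chatRequest: {
      message,
      maxTokens: 1000,
      isStream: false,
      apiFormat: "COHERE",
      frequencyPenalty: 1,
      presencePenalty: 0,
      temperature: 0.75,
      topP: 0.7,
      topK: 1,
      ...overrides, // caller-supplied values win over the defaults
    },
  };
}

// Placeholder OCID for illustration only; substitute your own compartment OCID.
const sampleBody = buildChatRequest(
  "ocid1.compartment.oc1..example",
  "Tell me something about the Oracle Digital Assistant.",
  { temperature: 0.2 }
);
console.log(JSON.stringify(sampleBody, null, 2));
```

Passing overrides as a separate argument lets you experiment with parameters such as temperature without editing the base payload.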

Step 3: LLM Transformation Handlers

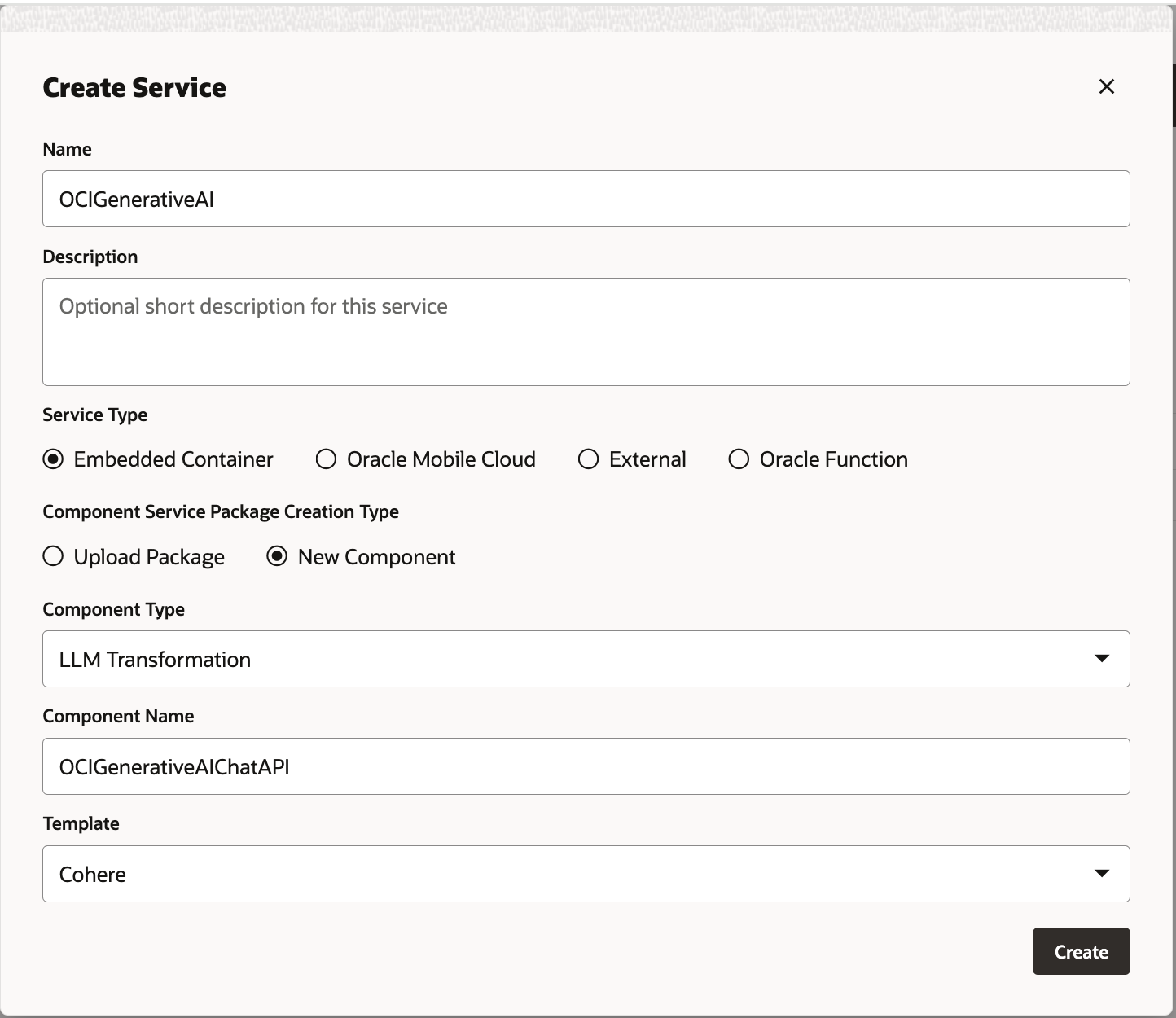

The LLM transformation handlers can be created by navigating to Components > Services > Add Service within the skill.

You can reference the OCI Generative AI request, response, and error transformation handlers for Cohere and Llama models through this link. We have created the transformation handler for the OCI Generative AI chat endpoint using the “cohere.command-r-plus” model.

Transformation Handlers for OCI Generative AI Chat Endpoint

    module.exports = {
      metadata: {
        name: 'OCIGenerativeAIChatAPI',
        eventHandlerType: 'LlmTransformation'
      },
      handlers: {
        // Convert the request sent by ODA into the OCI Generative AI chat request format.
        transformRequestPayload: async (event, context) => {
          context.logger().info("transformRequestPayload");
          // Cohere doesn't support chat completions, so we first print the system prompt, and if there
          // are additional chat entries, we add these to the system prompt under the heading CONVERSATION HISTORY
          let prompt = event.payload.messages[0].content;
          if (event.payload.messages.length > 1) {
            let history = event.payload.messages.slice(1).reduce((acc, cur) => `${acc}\n${cur.role}: ${cur.content}`, '');
            prompt += `\n\nCONVERSATION HISTORY:${history}\nassistant:`;
          }
          // using Cohere
          let modelId = "cohere.command-r-plus";
          let runtimeType = "COHERE";
          return {
            // Replace with the OCID of the compartment you have access to
            "compartmentId": "ocid1.tenancy.oc1..",
            "servingMode": {
              "servingType": "ON_DEMAND",
              "modelId": modelId
            },
            "chatRequest": {
              "message": prompt,
              "isStream": event.payload.streamResponse,
              "maxTokens": event.payload.maxTokens,
              "temperature": event.payload.temperature,
              "apiFormat": runtimeType,
              "frequencyPenalty": 1,
              "presencePenalty": 0,
              "topP": 0.7,
              "topK": 1
            }
          };
        },
        // Convert the OCI Generative AI response into the candidates structure returned to ODA.
        transformResponsePayload: async (event, context) => {
          let llmPayload = {};
          if (event.payload.responseItems) {
            // streaming case
            llmPayload.responseItems = [];
            event.payload.responseItems.forEach(item => {
              llmPayload.responseItems.push({"candidates": [{"content": item.text || "" }]});
            });
          } else {
            // non-streaming
            llmPayload.candidates = [{"content": event.payload.chatResponse.text}];
          }
          return llmPayload;
        },
        // Map provider errors to the error codes returned to ODA.
        transformErrorResponsePayload: async (event, context) => {
          const error = event.payload.message || 'unknown error';
          if (error.startsWith('invalid request: total number of tokens')) {
            // returning modelLengthExceeded error code will cause a retry with reduced chat history
            return {"errorCode" : "modelLengthExceeded", "errorMessage": error};
          } else {
            return {"errorCode" : "unknown", "errorMessage": error};
          }
        }
      }
    };
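
The prompt-flattening and error-mapping rules used in the handlers above can be tried locally before deploying the component. The sketch below copies those two rules into plain functions; `flattenMessages`, `mapError`, and the sample messages are hypothetical names and data used only for this experiment, and nothing here calls ODA or OCI.

```javascript
// Standalone copy of the history-flattening logic, for local experiments only.
function flattenMessages(messages) {
  let prompt = messages[0].content;
  if (messages.length > 1) {
    const history = messages
      .slice(1)
      .reduce((acc, cur) => `${acc}\n${cur.role}: ${cur.content}`, "");
    prompt += `\n\nCONVERSATION HISTORY:${history}\nassistant:`;
  }
  return prompt;
}

// Standalone copy of the error-mapping rule.
function mapError(message) {
  const error = message || "unknown error";
  const errorCode = error.startsWith("invalid request: total number of tokens")
    ? "modelLengthExceeded"
    : "unknown";
  return { errorCode, errorMessage: error };
}

// Made-up conversation: one system prompt followed by one user turn.
const sampleMessages = [
  { role: "system", content: "Summarize the order." },
  { role: "user", content: "Make it shorter." },
];
console.log(flattenMessages(sampleMessages));
console.log(mapError("invalid request: total number of tokens exceeds the limit"));
```

Running this with a longer message list shows how quickly the flattened prompt grows, which is why the token-limit error is mapped to a retry with reduced history.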

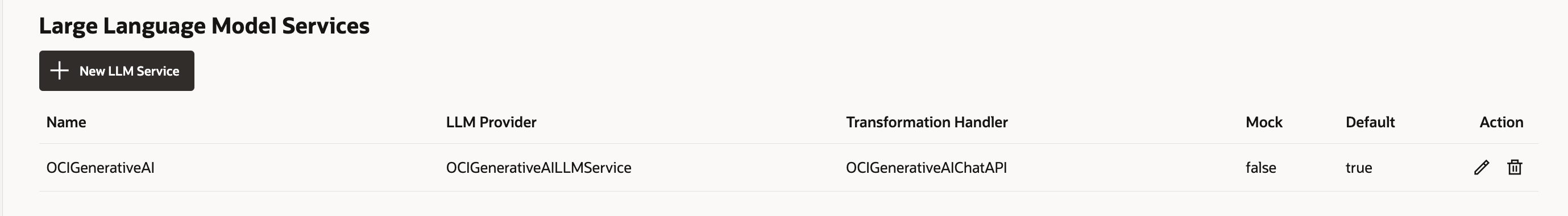

Step 4: Association of LLM Service and Transformation Handlers in ODA Skill

You need to associate the LLM service defined as a REST API (Step 2) with the transformation handlers (Step 3). This can be done by navigating to Settings > Configuration inside the skill and creating a new LLM service in the Large Language Model Services section, as shown in the screenshot below.

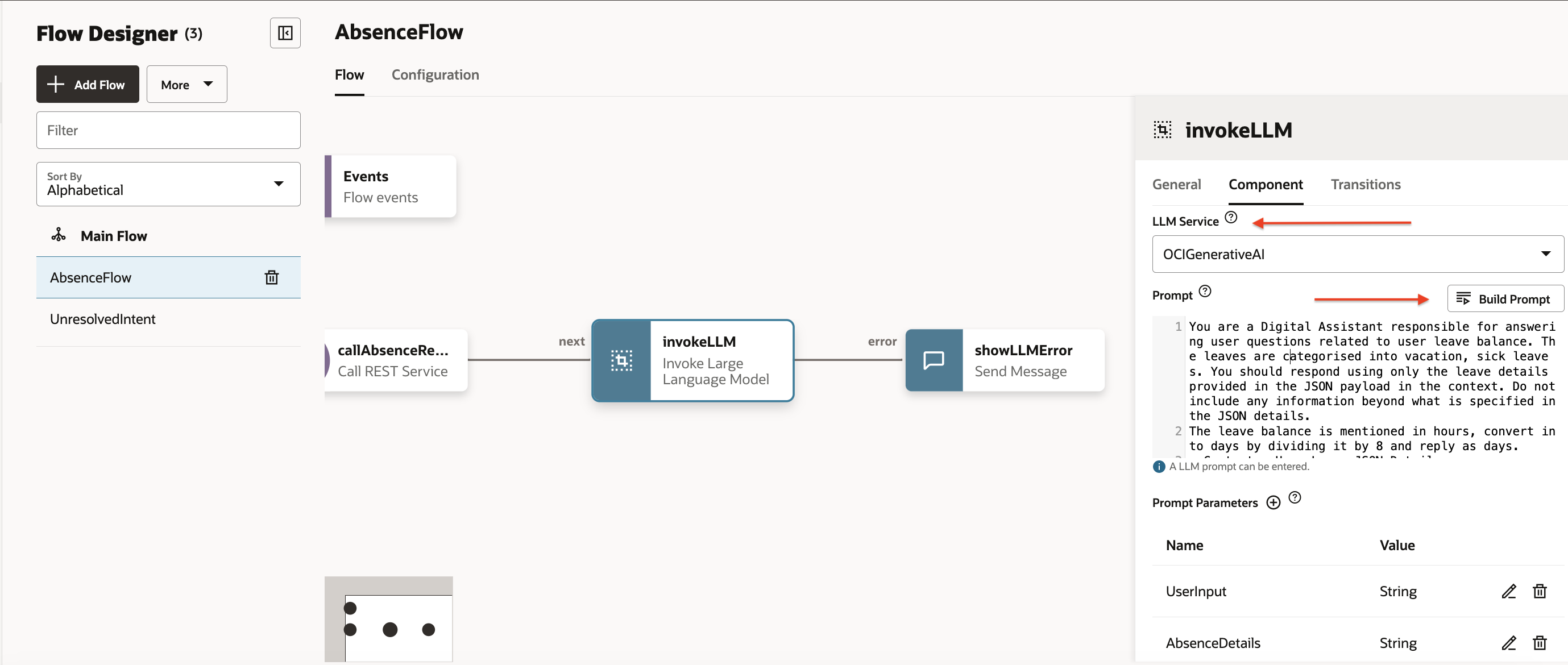

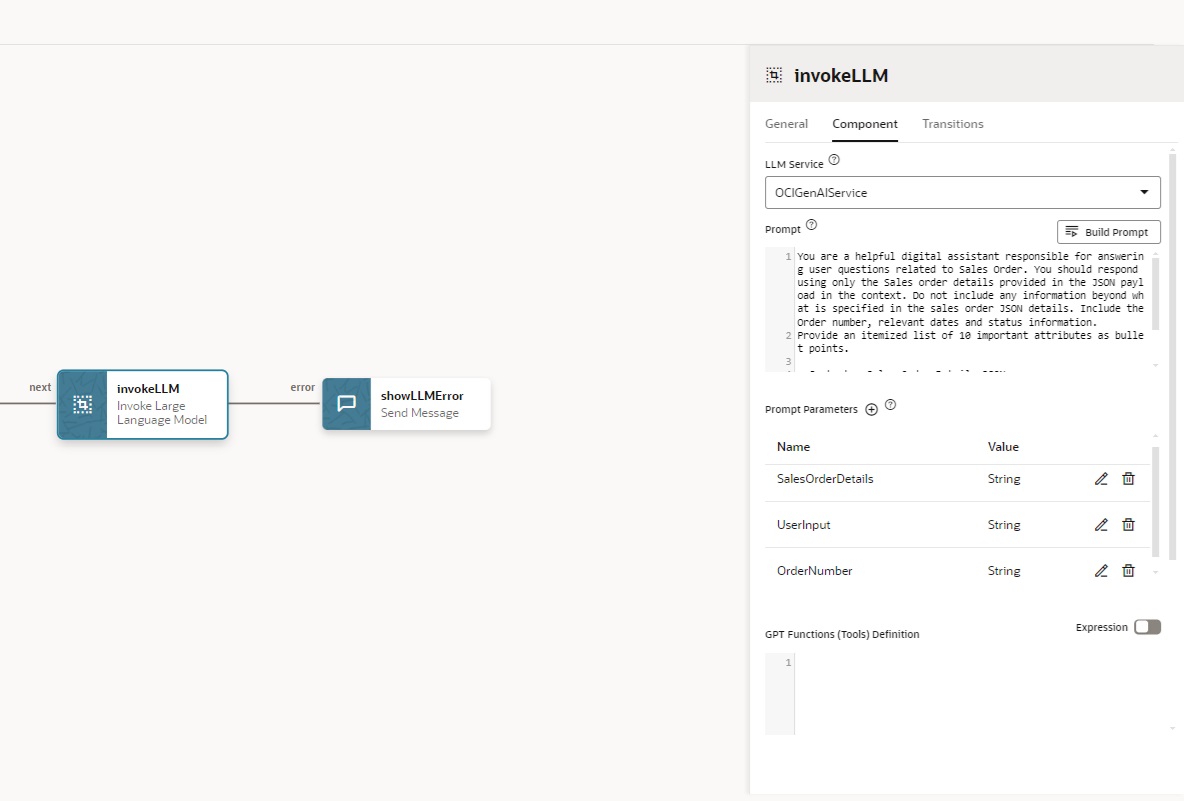

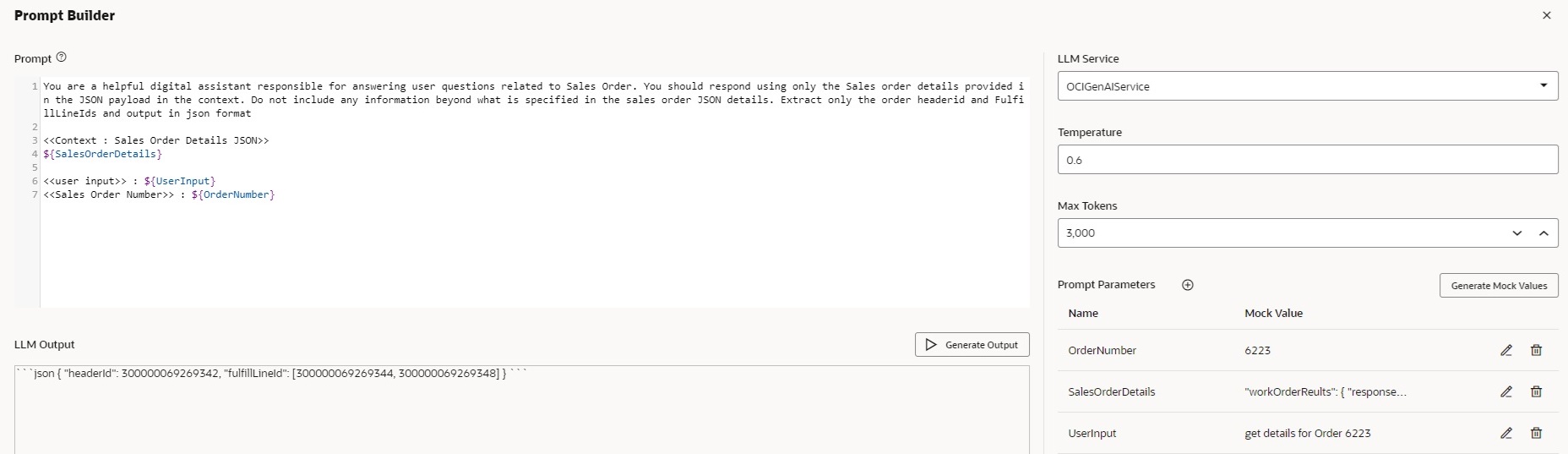

Step 5: LLM Component

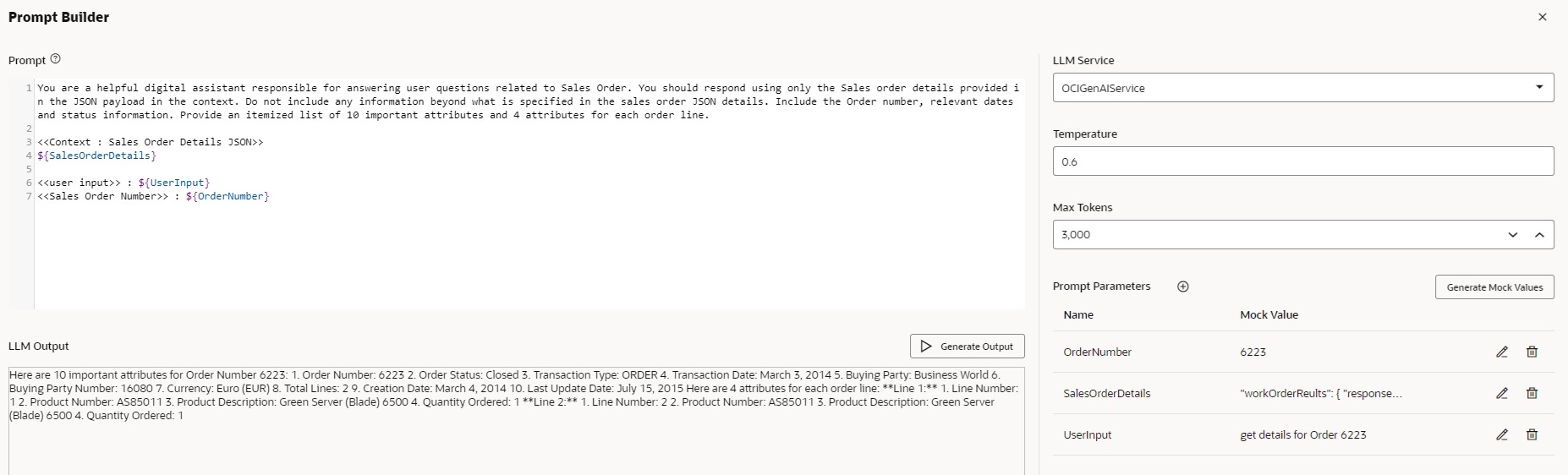

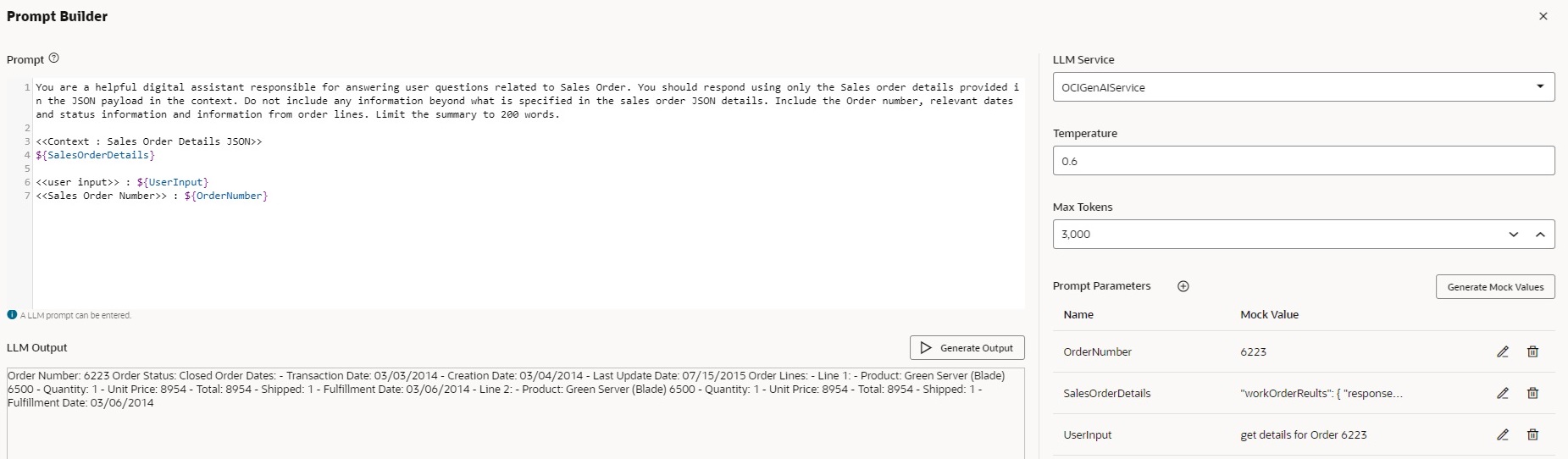

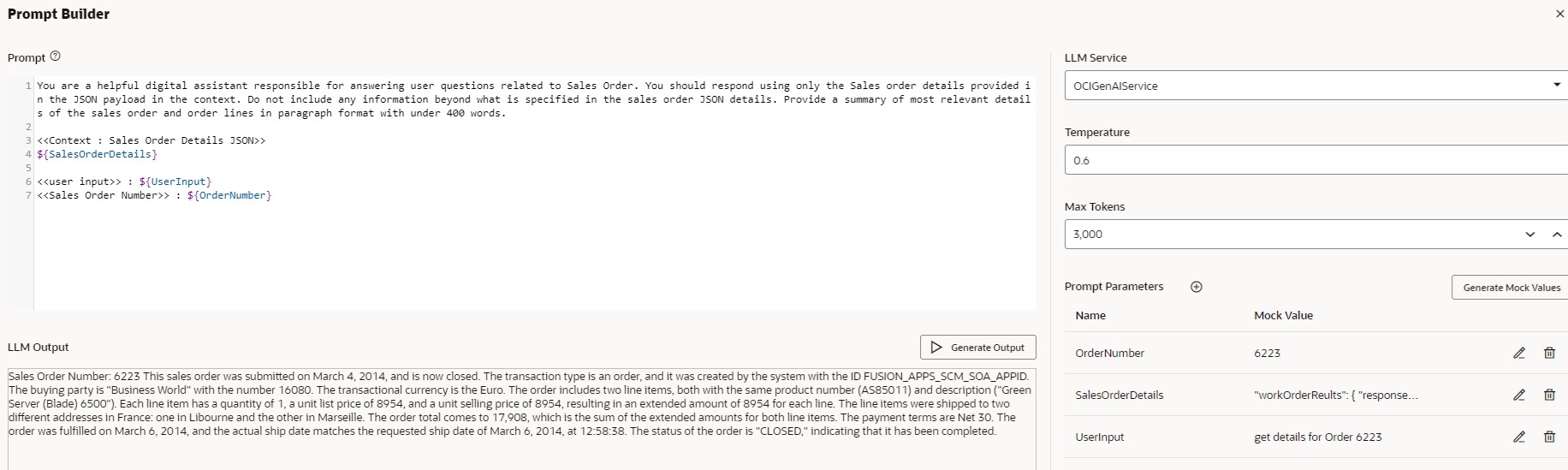

The Large Language Model (LLM) component in ODA facilitates conversations with users. You can add this component to your dialog flow by selecting Service Integration > Invoke Large Language Model from the Add State dialog and then selecting the LLM service created in Step 4. You can create and test prompts using the prompt builder, and assign mock values to prompt parameters during testing. You can also configure advanced options such as temperature and token limit, and enable response streaming by setting the “Use Streaming” parameter to True. The LLM component’s built-in validation offers protection against vulnerabilities, but if you wish to strengthen it, you can configure custom request and response validation handlers. For further details, click on this link.

Now let us look at a sample use case that applies ODA’s generative AI capability to a Fusion SaaS application.
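
To give a feel for the kind of rule a custom response validation might apply, here is a minimal sketch written as a plain function. It is not the ODA validation handler API: `validateLlmResponse`, its options, and the default limit are hypothetical, and wiring such a check into the component's validation handlers is not shown.

```javascript
// Hypothetical response check: non-empty, within a size limit, and free of
// terms that should not be surfaced to users.
function validateLlmResponse(text, { maxChars = 1500, blockedTerms = [] } = {}) {
  if (typeof text !== "string" || text.trim() === "") {
    return ["Response is empty."];
  }
  const errors = [];
  if (text.length > maxChars) {
    errors.push(`Response exceeds ${maxChars} characters.`);
  }
  const lower = text.toLowerCase();
  for (const term of blockedTerms) {
    if (lower.includes(term.toLowerCase())) {
      errors.push(`Response contains blocked term: ${term}`);
    }
  }
  return errors; // an empty array means the response passed every check
}

console.log(validateLlmResponse("Your order has shipped.", { blockedTerms: ["password"] }));
```

Returning a list of messages rather than a boolean makes it easier to log or display why a response was rejected.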

Fusion Sales Order Assistant

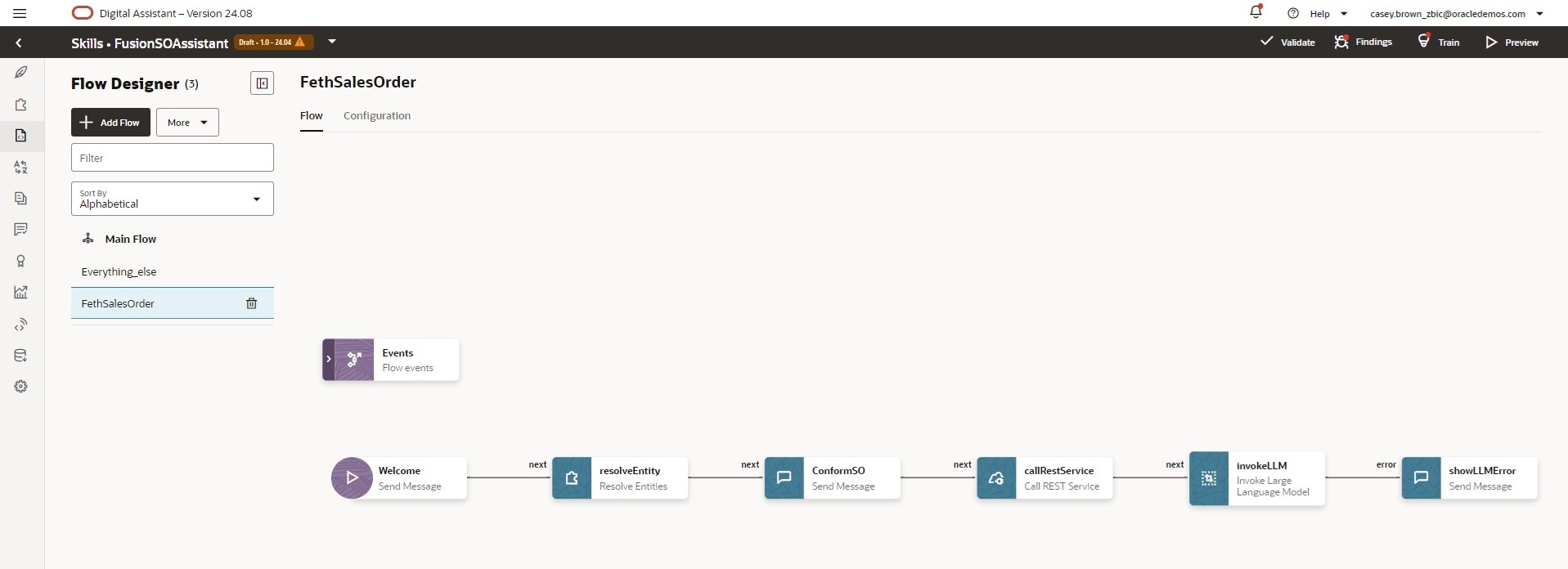

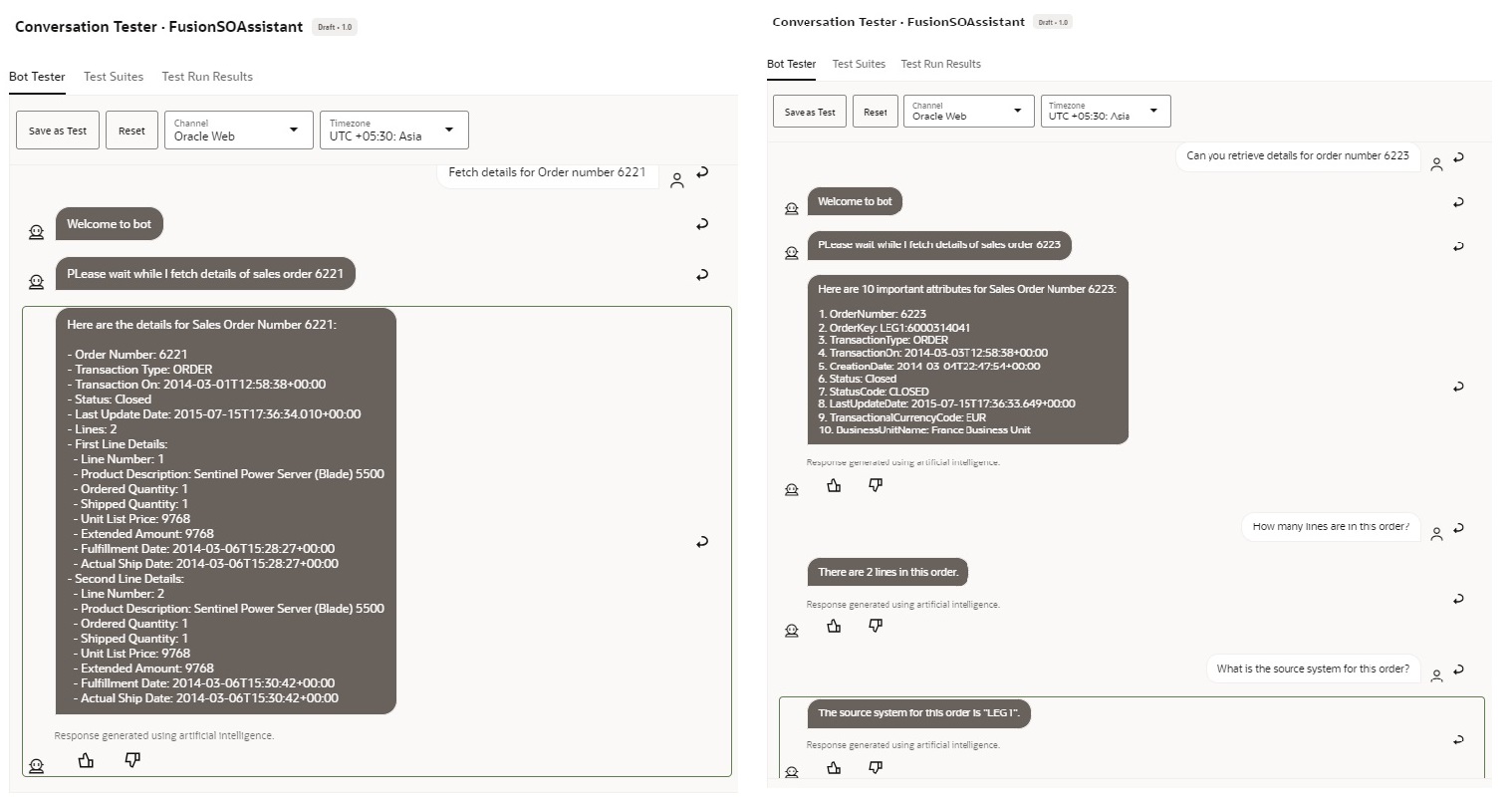

This use case uses the generative AI LLM component to summarize sales order details in a human-friendly format. This could be useful for sales order operators who want to refer to sales order details on their mobile devices while on the go.

The diagram below shows a simple ODA Visual Designer flow.

In summary, the ODA conversation flow resolves the sales order number entity from the user conversation and uses it to retrieve the sales order details through the REST Service component. The REST Service uses the SalesOrderHub REST API of Fusion SCM Order Management. The sales order details are returned in JSON format and contain a large number of fields. An Invoke LLM component is therefore added to the ODA flow to summarize the sales order and display the most relevant details in natural language. The Invoke LLM component uses the LLM service and transformation handlers created in the previous section to integrate seamlessly into the ODA conversation flow. In this use case, the LLM component receives the order details JSON as context and summarizes the most relevant details of the sales order, as configured in the LLM prompt.
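
Because the order JSON carries many fields, one option is to trim it before passing it to the LLM as context, which also keeps the prompt within token limits. The sketch below shows the idea; the field names and the mock order are hypothetical and do not describe the actual REST response, so adapt the list to the attributes your API version returns.

```javascript
// Hypothetical field names; the real response shape depends on the REST API.
const SUMMARY_FIELDS = [
  "OrderNumber",
  "StatusCode",
  "BuyingPartyName",
  "TransactionalCurrencyCode",
];

// Keep only the listed fields that are present and non-null.
function pickFields(order, fields = SUMMARY_FIELDS) {
  const slim = {};
  for (const field of fields) {
    if (order[field] !== undefined && order[field] !== null) {
      slim[field] = order[field];
    }
  }
  return slim;
}

// Made-up order used only to exercise the helper.
const mockOrder = {
  OrderNumber: "1001",
  StatusCode: "OPEN",
  BuyingPartyName: "Acme",
  InternalFlag: "Y",
  links: [],
};
console.log(JSON.stringify(pickFields(mockOrder)));
```

Whether to trim in the flow or to rely on the prompt to ignore irrelevant fields is a design choice; trimming makes the context smaller and the summaries more predictable.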

The output format and verbosity of the summarization can be modified by tuning the prompt given to the LLM component. The LLM component provides a prompt builder that can be used to tune the prompts and responses at design time. See below examples of the outputs generated by the LLM component for prompt variations suited to different use cases.

Finally, shown below are some sample ODA user interactions with the chatbot using the Fusion Sales Order Assistant skill.
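
To make the idea of prompt variations concrete, the sketch below assembles two prompt styles around the same order context. The instruction wording, the style names, and `buildSummaryPrompt` are hypothetical and are not the prompts used in the skill; in ODA you would enter and tune the equivalent text in the prompt builder.

```javascript
// Hypothetical prompt styles; the wording is illustrative only.
const PROMPT_STYLES = new Map([
  ["brief", "Summarize the sales order in at most two sentences."],
  [
    "detailed",
    "Summarize the sales order as a short bulleted list covering status, customer, and order lines.",
  ],
]);

// Combine the chosen instruction with the order JSON passed as context.
function buildSummaryPrompt(orderJson, style = "brief") {
  const instruction = PROMPT_STYLES.get(style);
  if (!instruction) {
    throw new Error(`Unknown style: ${style}`);
  }
  return `${instruction}\nUse only the data provided.\n\nORDER DETAILS:\n${orderJson}`;
}

console.log(buildSummaryPrompt('{"OrderNumber":"1001"}', "detailed"));
```

Keeping the instruction separate from the order context makes it easy to compare how each variation changes the length and format of the summary.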

Conclusion

As we have seen, it is quite easy to design intuitive and helpful chatbot interactions by combining the conversation flow of ODA with the power of LLM capabilities. This can be particularly useful for a variety of Fusion Digital Assistant use cases, where new skills can be added that use LLM features to simplify and enrich the experience of Fusion SaaS users.